I'm refining an article on AI literacy that explores two angles:

- How application-layer companies can make their products more intuitive.

- How users can extract more value from AI tools by understanding how the systems work.

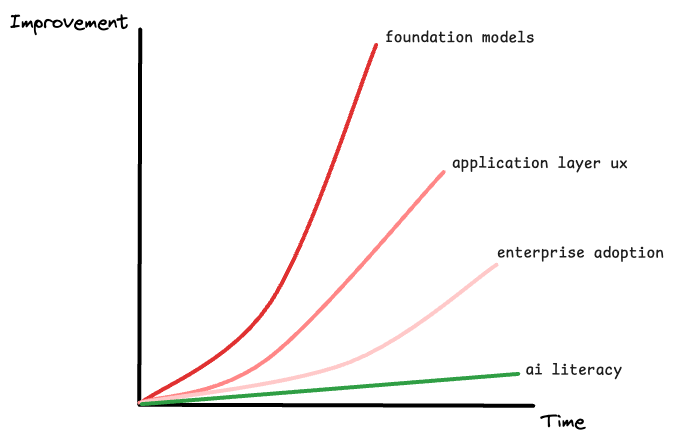

A related concern is that AI adoption is accelerating faster than AI literacy. The result is a widening gap between what businesses invest in employee-facing AI and the ROI they actually realise.

Not everyone in a large organisation will become an AI expert. As the technology keeps evolving, we'll need a stronger focus on accessibility, likely through significant investment in education and training as part of a wider AI strategy.

In this article I'll outline what the lifecycle of AI adoption might look like in the enterprise and how we can keep pace with the rate of change.

Table of Contents

- Assumption 1 - There isn't "one" AI strategy

- Assumption 2 - Safety & A11y

- Adoption lifecycle

- Be Realistic

Assumption 1

Each function will craft its own AI strategy (some may need two)

Unlike cloud adoption (the last big enterprise shift), AI roll-outs won't be driven solely by product and engineering. A product team will use generative AI very differently from, say, an operations team.

The most important pillar is safe, flexible access to generative AI, typically through a leading foundation model and a secure chat interface. Once this is in place, the central AI strategy shifts to enabling individual teams to craft their own.

Assumption 2

Adoption needs to be "accessible and safe" with just enough governance

Businesses must protect proprietary data and customer information, securing guarantees that model inputs/outputs won't leak or feed future training runs. At the same time, teams need freedom to apply AI in ways that work best for them.

These goals feel at odds today, but the tension should ease as model-routing platforms mature and cloud providers educate customers on safe AI usage.

A central strategy team should set guardrails; success comes from individual teams executing their own plans within those boundaries.
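As one illustration of a guardrail a central team might provide, here is a minimal Python sketch that redacts obvious personal data from a prompt before it is sent to an external model. The `redact` function and both regular expressions are hypothetical and deliberately simplistic; real data-loss prevention needs far broader coverage than two patterns.

```python
import re

# Illustrative patterns only (hypothetical); real PII detection needs much broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def redact(prompt: str) -> str:
    """Replace email addresses and card-like digit runs with placeholders."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    prompt = CARD.sub("[CARD]", prompt)
    return prompt


print(redact("Refund jane.doe@example.com on card 4111 1111 1111 1111"))
# → Refund [EMAIL] on card [CARD]
```

Shipping a shared helper like this centrally, rather than asking every team to write their own, is one way to keep governance light while still letting teams build freely.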

Adoption lifecycle

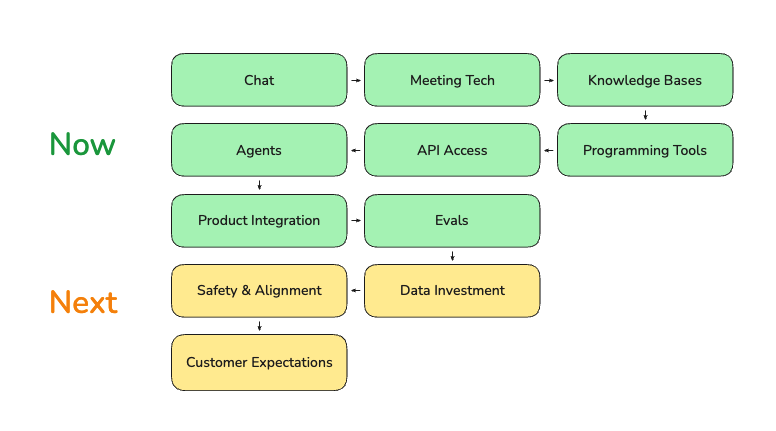

To build a robust adoption strategy, start with a hypothesis about how internal and customer expectations will evolve:

Short-Term Must-Haves

| Stage | What it is | Why it matters |
|---|---|---|
| Chat interface access | Safe access to a top foundation model via chat. | Baseline capability: the "Microsoft Office Suite" of the AI era. |
| Meeting transcription & summarisation | Built-in or third-party. | Saves time and captures knowledge automatically. |
| Institutional knowledge integration | Company knowledge base surfaced inside the primary generative AI tool. | Reduces context-switching; speeds up answers. |
| Programming tools | Generative AI code assistants (ChatGPT, Gemini) → agentic coding tools (Copilot, Cursor, Windsurf, Codex, Devin) and MCP integrations. | Developers will demand it. |
| API access | Foundation model APIs in the tech stack. | Enables internal solutions and rapid experiments. |
| Agentic access | No-code/low-code composition of AI workflows (longer horizon). | Empowers non-technical teams to automate. |
| Product integration | AI features embedded in customer-facing products. | Becomes a key differentiator. |
| Evaluation loop | Continuous measurement of tool impact (evals, KPIs). | Proves ROI; guides iteration. |
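The evaluation loop can start very small. The sketch below, assuming a stand-in `stub_model` function in place of a real foundation-model call, scores a model against a handful of known question-answer pairs and reports a pass rate. `EvalCase`, `run_evals` and the substring pass criterion are illustrative choices, not a standard evaluation framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    expected_substring: str  # deliberately simple pass criterion


def run_evals(model: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Return the fraction of cases whose output contains the expected text."""
    if not cases:
        return 0.0
    passed = sum(
        1
        for case in cases
        if case.expected_substring.lower() in model(case.prompt).lower()
    )
    return passed / len(cases)


def stub_model(prompt: str) -> str:
    # Hypothetical stand-in for a real foundation-model call.
    canned = {
        "What is our refund window?": "Refunds are accepted within 30 days.",
        "Which team owns billing?": "I'm not sure.",
    }
    return canned.get(prompt, "")


cases = [
    EvalCase("What is our refund window?", "30 days"),
    EvalCase("Which team owns billing?", "Finance"),
]

print(f"pass rate: {run_evals(stub_model, cases):.0%}")  # → pass rate: 50%
```

Tracking this number across model or prompt changes gives a crude but repeatable signal; in practice you would replace the substring check with task-specific scoring and tie the results to business KPIs.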

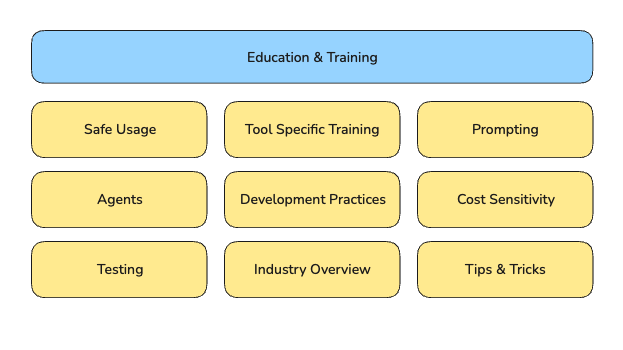

All of these stages need to be underpinned by an ongoing investment in education and training:

| Area | Importance |
|---|---|
| Safe Usage | Prevents leakage of customer and other confidential data. |
| Tool-Specific Training | Employees need to master an ever-growing toolset to stay productive. |
| Prompting | Crafting good prompts is like learning a new language and is essential for quality results. |
| Agents | Often a more technical topic; teams must understand orchestration and limitations. |
| Testing | Outputs can't be blindly trusted, so robust validation is required. |
| Cost Sensitivity | Compute/API usage can become expensive; optimisation protects budgets. |
| Industry Overview | Internal SMEs must track the landscape and keep the organisation up to date. |
| Tips & Tricks | Sharing best practices lifts overall organisational AI literacy. |
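Cost sensitivity is largely arithmetic: requests × tokens per request × price per token. The back-of-envelope sketch below assumes hypothetical per-million-token prices and usage figures; substitute your provider's actual rates before drawing any conclusions.

```python
# Hypothetical prices in USD per million tokens; replace with your provider's real rates.
PRICE_PER_M_INPUT = 3.00
PRICE_PER_M_OUTPUT = 15.00


def monthly_cost(
    users: int,
    requests_per_user_per_day: int,
    input_tokens: int,
    output_tokens: int,
    working_days: int = 21,
) -> float:
    """Estimate monthly API spend in USD for one workload."""
    requests = users * requests_per_user_per_day * working_days
    input_cost = requests * input_tokens / 1_000_000 * PRICE_PER_M_INPUT
    output_cost = requests * output_tokens / 1_000_000 * PRICE_PER_M_OUTPUT
    return input_cost + output_cost


# Hypothetical workload: 500 employees, 20 requests each per working day.
baseline = monthly_cost(500, 20, input_tokens=2_000, output_tokens=500)
trimmed = monthly_cost(500, 20, input_tokens=1_000, output_tokens=500)

print(f"baseline: ${baseline:,.0f}")  # → baseline: $2,835
print(f"trimmed context: ${trimmed:,.0f}")  # → trimmed context: $2,205
```

In this made-up scenario, halving the input context (for example by trimming retrieved documents) cuts the monthly estimate from $2,835 to $2,205, which is the kind of optimisation cost-sensitivity training should make visible.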

Once we've figured out what we need in the short term (and how to provide the right training), we can start thinking about what comes next.

New Horizons

These areas require deeper investment and are best tackled once you have a strong evaluation loop proving the ROI of your earlier investments.

- Increased Data Team Importance: Fine-tuning and context loading become core competencies. Data readiness is more important than ever.

- Internal AI Safety and Alignment: Organisations will need to consider the safety and alignment of their AI systems.

- Shifting Customer Expectations: Depending on the industry, customers will start to expect that they can use generative AI to solve their problems.

Be Realistic

Because we're deep in a hype cycle, tools adopted in the next 12-24 months may fall out of favour quickly. Keep your strategy resilient:

- Check for simple answers first: don't overlook traditional or deterministic solutions.

- Leverage your data moat: the competitive edge will come from where your data meets the new tech.

- Don't over-index on adoption: the more real problems you're solving, the better your AI strategy is going. Your KPIs should be tied to value-creation, not how many tools you've tried.

- Design for flexibility: minimise lock-in so that you can pivot when the wave shifts.

- Keep a list of open questions to guide your strategy, for example:

  - "How much of X will be automated soon?"

  - "Does this process need a human in the loop?"
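One common way to minimise lock-in is to have application code depend on a thin internal interface rather than on any one vendor's SDK. The Python sketch below illustrates that adapter pattern; `ChatModel`, `VendorAModel`, `VendorBModel`, `PROVIDERS` and `summarise_ticket` are hypothetical names, and the adapters return canned strings where a real implementation would call a provider's API.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The only model interface application code depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class VendorAModel:
    # Hypothetical adapter; a real one would wrap vendor A's SDK here.
    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class VendorBModel:
    # Hypothetical adapter for a second provider.
    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt}"


# Swapping providers means changing a key here, not rewriting business logic.
PROVIDERS: dict[str, ChatModel] = {
    "vendor-a": VendorAModel(),
    "vendor-b": VendorBModel(),
}


def summarise_ticket(model: ChatModel, ticket: str) -> str:
    # Business logic sees only the ChatModel interface, never a vendor SDK.
    return model.complete(f"Summarise this support ticket: {ticket}")


print(summarise_ticket(PROVIDERS["vendor-a"], "Login fails on mobile"))
# → [vendor-a] Summarise this support ticket: Login fails on mobile
```

Because `summarise_ticket` only sees the `ChatModel` interface, switching providers becomes a configuration change rather than a rewrite, which keeps the cost of pivoting low.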

Disclaimer

The ideas and opinions expressed here are entirely my own and do not represent the official stance of my employer or any organisation I’m affiliated with.