I'm currently refining this post. If you have any feedback, please reach out on X.

Back in 2021, I thought about computer literacy a lot. Growing up, I was always in front of a computer, but I was surrounded by adults who lacked basic computer literacy.

At some point I caught up to my father (who, mind you, I thought was very computer-literate).

I think this is the story most 30-somethings have about computing: growing up internet-native, learning from a parent or older sibling, and then quickly accelerating past them and unlocking your "computer" chakra.

Something similar is happening now with generative AI, but this time I think a lot more people are going to be left behind.

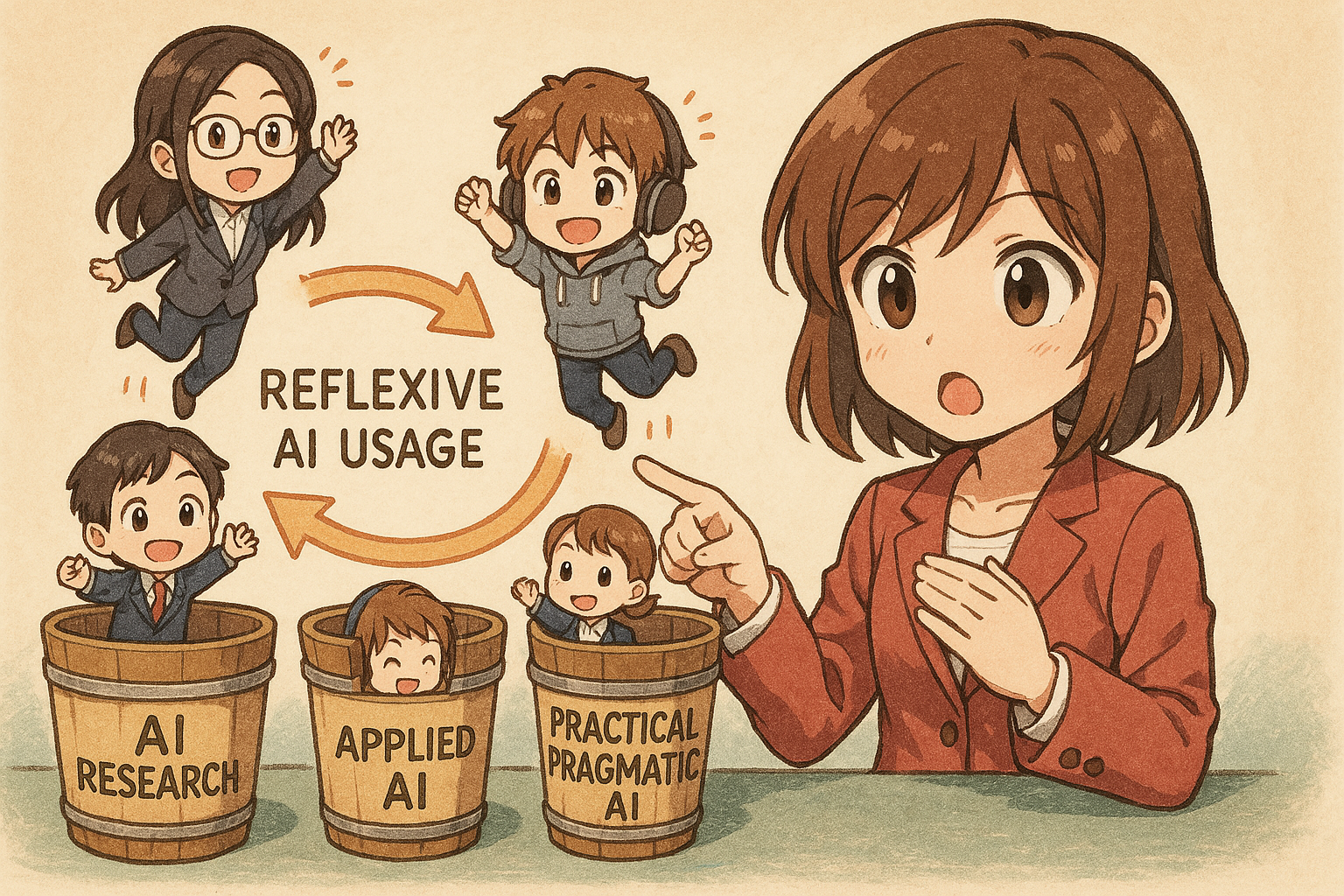

AI literacy is a complicated term. When I think about it, there are three buckets of knowledge:

- AI research

- Applied AI

- Practical or Pragmatic AI

Vibe coding, in my opinion, falls into the Applied AI bucket.

The bulk of the AI literacy problem sits in the Practical or Pragmatic AI bucket.

The "end users" of conversational AI (irrespective of which product it's embedded into) likely aren't getting the maximum value out of it.

Some companies are starting to say that "AI usage is now a baseline expectation", but most people don't have a map for what effective AI usage even means.

Where does this leave the average user?

We Need To Improve AI Literacy

It's not enough that AI is being made more and more accessible. We need to make sure people know how to use it effectively. Alternatively, research labs and application-layer companies need to accelerate toward better model UX and ergonomics.

Maybe we don't need to get better at learning AI; maybe AI needs to get better at understanding us

I think this is a valid criticism of the AI research labs and application layer companies.

Why should we have to get better at using the products? This isn't B2B SaaS, right?

Model UX is a problem that stretches across the foundation layer and the application layer.

The foundation layer is where the research labs are. They should push the boundaries of what's possible whilst still providing an ergonomic interface for the application layer to consume.

The application layer is where the killer-app companies are (or will be). Killer apps are supposed to be the ones that you "just get"; that's part of what makes them killer.

Currently, the application layer feels a bit like a really good gadget that you have to hold a certain way to get the best experience.

In theory, as a consumer of the app layer you shouldn't have to flip something upside down to get the most out of it.

But maybe it's not that simple

You don't have to be an engineer to be a racing driver, but you do have to have Mechanical Sympathy.

Jackie Stewart, racing driver

Perhaps LLMs are a bit like a race car.

If you understand how a system is designed to be used, you'll be able to get the most out of it.

Now, should everyone be an expert in using LLMs? No.

But I think we can all agree that the current state of application-layer UX is not good enough.

Maybe the solution is to give the average user a way to understand the system better, and then let them use it as they see fit.

I think this means exposing system prompts to users and exposing model reasoning more transparently.

This would mean placing more trust in both the user AND the system to handle the complexity without breaking. But what's the worst that could happen?

What's next?

- It doesn't just come down to "write better prompts". A lot of the time, prompt engineering results in more semantic noise than you need.

- Give people access to system prompts (where it makes sense). See Horseless Carriages

- Expose reasoning more transparently. Being able to see how a model works through the context you've given it is a powerful tool for learning how to give it the right context.