Daily Notes

I didn't really plan to write a daily note, but during the past week I realised that I should just be doing things I want to do, instead of trying to have a plan or "pathway".

I'll likely write a longer and better explanation of doing what I want to do vs having a plan, but no promises!

Daily notes seem like a nice way to re-train my writing muscle (which is something I want to do), and also give me a chance to reflect on things that happened.

Does a daily cadence make sense? Who knows!

This being the first note, I'm just going to talk about Meta AI and llama3, the stuff I played with today.

- Meta AI

- llama3

- Thoughts on running LLMs locally

Meta AI

There's been a bit of buzz lately about how Meta has added Meta AI to Instagram, Facebook and WhatsApp.

Unfortunately, I haven't been randomly selected for the A/B test on any of my social accounts, so I had a conversation with Meta AI using the web portal.

My first conversation skipped the pleasantries and we got straight into a game of X's and O's. I was surprised by how fast the inference seemed right out of the gate.

llama3

Off the back of my chat with Meta AI, I figured it was as good a time as any to try to run a model on my MacBook.

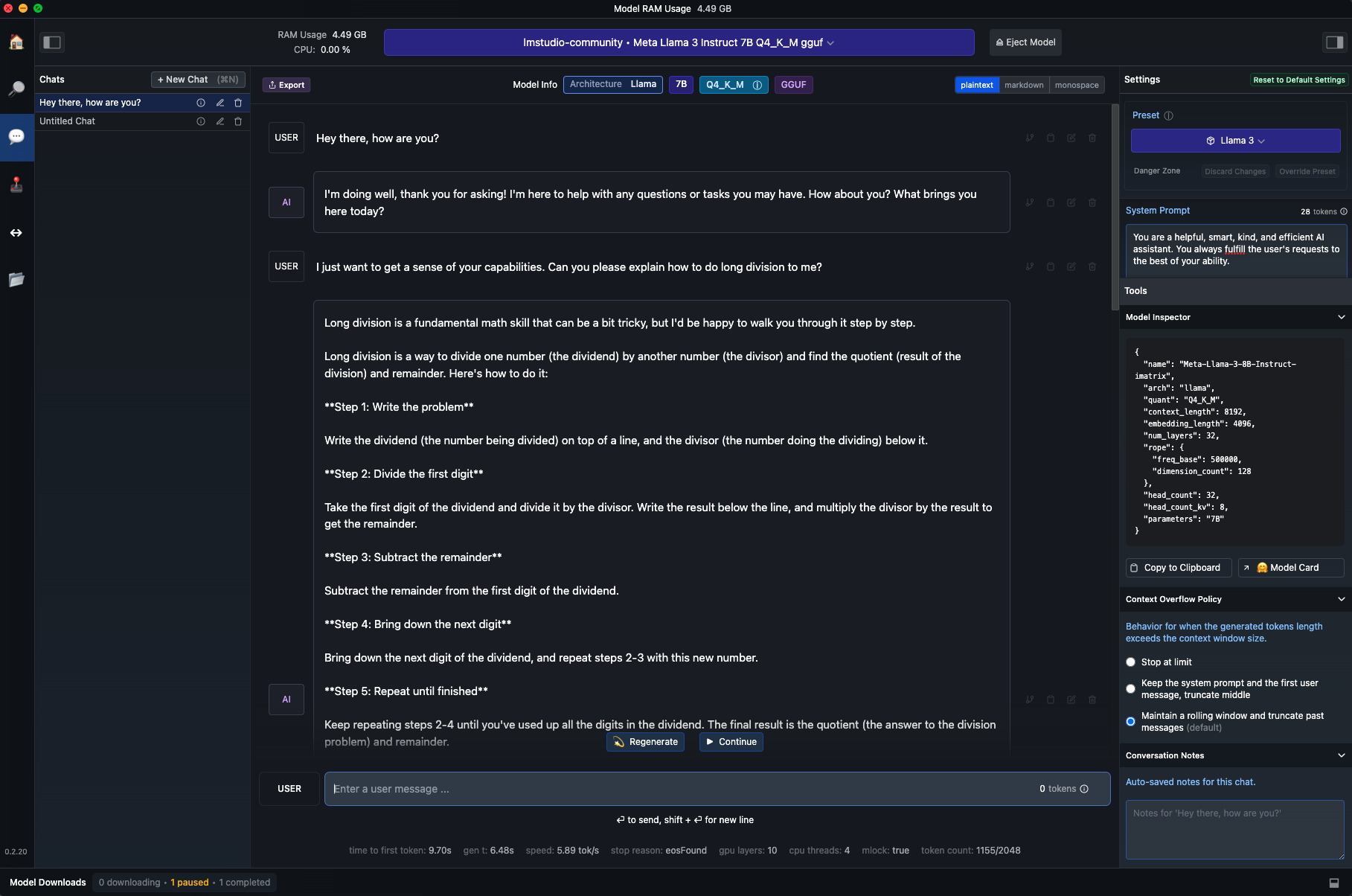

I watched this video from Google, which led me to LM Studio.

It took ~15 minutes to download the 8B parameter llama3 instruct model (llama3 comes in 8B and 70B sizes) and have another quick conversation with it.

The tps (tokens per second) and ttft (time to first token) are probably a bit slower than they would be on better hardware, but not too shabby for a 4 GB model running on a 4-year-old M1 MBP!
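For anyone curious how those two numbers fall out of a streamed response, here is a minimal sketch. It assumes the tokens arrive as a plain Python iterable, and it uses one common convention for tps (tokens generated after the first one, divided by the time since the first one). `measure_stream` and `fake_stream` are hypothetical names for illustration, not part of LM Studio or any library.

```python
import time
from typing import Callable, Iterable, Iterator


def measure_stream(
    tokens: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> dict:
    """Consume a token stream and report ttft (seconds) and tps."""
    start = clock()
    first_token_at = None
    count = 0
    for _ in tokens:
        if first_token_at is None:
            # The moment the first token shows up marks the end of the "ttft" wait.
            first_token_at = clock()
        count += 1
    end = clock()

    if first_token_at is None:
        # Empty stream: nothing to measure.
        return {"tokens": 0, "ttft_s": None, "tps": 0.0}

    generation_time = end - first_token_at
    # Assumption: tps is measured over the generation phase only,
    # so the first token (covered by ttft) is excluded from the count.
    if count > 1 and generation_time > 0:
        tps = (count - 1) / generation_time
    else:
        tps = 0.0
    return {"tokens": count, "ttft_s": first_token_at - start, "tps": tps}


def fake_stream(n: int, delay_s: float) -> Iterator[str]:
    """Stand-in for a real model stream: yields n tokens with a fixed delay."""
    for i in range(n):
        time.sleep(delay_s)
        yield f"tok{i}"


if __name__ == "__main__":
    print(measure_stream(fake_stream(n=20, delay_s=0.01)))
```

Swapping `fake_stream` for a real streaming response would give rough numbers for a local model, though tools like LM Studio already report these stats in their UI.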

Running models locally

Being able to run LLMs locally and easily (especially with LM Studio's "server" mode) should make prototyping really cheap and accessible (save for my SSD being filled with .gguf files).
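To give a feel for what prototyping against that "server" mode might look like, here is a rough sketch using only the Python standard library. It assumes the local server is running and exposes an OpenAI-style chat completions endpoint at `http://localhost:1234/v1`; the port, the `"local-model"` placeholder name, and the helper functions `build_chat_request` and `chat` are all assumptions for illustration, so check them against what LM Studio shows on your machine.

```python
import json
import urllib.request

# Assumption: the local server listens here. Adjust to whatever the app reports.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(
    prompt: str,
    model: str = "local-model",  # placeholder name, not a real model id
    base_url: str = BASE_URL,
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, **kwargs) -> str:
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Assumes the response follows the OpenAI chat completion shape.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Only works while the local server is running with a model loaded.
    print(chat("Want to play X's and O's?"))
```

Because the request shape mirrors a hosted API, a prototype built this way could later be pointed at a different endpoint by changing `base_url`, which is a big part of why local serving feels so cheap to experiment with.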